Agentic AI: A Paradigm Shift in AI Applications

We are entering an era of rapid AI expansion, essentially a process of iterative technological paradigm shifts that continually open new application scenarios. Today, AI agent technology powered by large models is ushering in a profound paradigm change: from tool-based AI to Agentic AI (autonomous agents). With a closed loop of planning, perception, decision-making, and execution, Agentic AI is transforming autonomous networks from manually driven into agent-led systems.

AI Paradigm Shift: From Tool Execution to Autonomous Execution

AI agent applications are evolving from tool orchestration and execution to agent systems that can act on behalf of users—undertaking complex tasks, making decisions, and adapting to ever-changing environments.

Large Models Reshaping the Cognitive Foundation of Agents

Large language models (LLMs), pre-trained on vast amounts of data, establish a general world knowledge base. They can understand complex contexts, tackle cross-domain tasks, and demonstrate rudimentary reasoning and creative capabilities. As such, they form the cognitive foundation of agents, which can be summarized in three dimensions:

- Universal intelligent base: LLMs act as the agent's "brain," providing understanding, reasoning, and generation abilities.

- Environment perception and action: Agents actively interact with their environment through APIs, sensors, and tool interfaces.

- Goal-driven and evolutionary: Based on preset goals, agents plan execution paths, dynamically adjust strategies, and continuously optimize through feedback.

In this paradigm, AI shifts from being merely a "tool" to becoming an intelligent agent capable of independent decision-making, long-horizon operation, and dynamic evolution. Its operational logic follows a task-goal loop: the model is invoked to make decisions, tools execute accordingly, and results are fed back to the model until termination.
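
The task-goal loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `scripted_model` is a hypothetical stand-in for a real LLM call, and the `lookup` tool, the `Decision` type, and the `max_steps` limit are assumptions introduced here for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Decision:
    """What the model wants next: call a tool, or stop with an answer."""
    tool: Optional[str] = None
    argument: str = ""
    final_answer: Optional[str] = None


def scripted_model(goal: str, history: List[str]) -> Decision:
    """Hypothetical stand-in for an LLM; a real agent would prompt a model here."""
    if not history:
        return Decision(tool="lookup", argument=goal)
    return Decision(final_answer=f"Done: {history[-1]}")


def run_agent(
    goal: str,
    model: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Invoke the model, execute the chosen tool, feed the result back, repeat."""
    history: List[str] = []
    for _ in range(max_steps):
        decision = model(goal, history)
        if decision.final_answer is not None:
            return decision.final_answer  # termination condition
        result = tools[decision.tool](decision.argument)
        history.append(result)  # feedback for the next model call
    return "Stopped: step limit reached"


# Hypothetical tool registry with a single tool.
TOOLS = {"lookup": lambda query: f"result for '{query}'"}

print(run_agent("cell load", scripted_model, TOOLS))
```

The step limit is one simple way to guarantee termination when the model never produces a final answer; production systems typically combine it with cost or time budgets.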

LLM-based agents can autonomously decompose tasks, invoke toolchains, and dynamically adjust strategies, creating a closed-loop decision-making system. This "goal-driven, autonomous planning" model marks a fundamental shift in AI applications—from functional modularization to agent-centricity. Gartner predicts that by 2026, more than 80% of enterprises will deploy AI agents to restructure business processes, achieving efficiency gains of 40% to 60%.

AI Agents Powering a New Model of AI Production

Current LLM providers, such as OpenAI, Anthropic, Alibaba, and ByteDance, are transitioning from providing single API outputs to building agent ecosystem platforms. Microsoft, for example, has launched Copilot Studio, allowing enterprises to customize exclusive agents and integrate internal data and business processes. ByteDance has rolled out Coze and Coze Space as platforms for internet-based AI agents and enterprise applications.

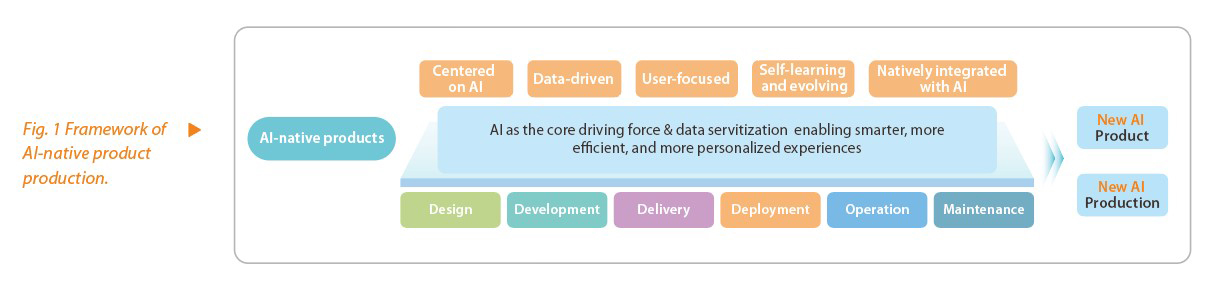

The widespread adoption of AI agents is fueling the growth of rapid AI application development platforms, which are becoming central hubs for AI value creation. The new model of AI production centers on interactive AI agent design, end-to-end rapid development, delivery, deployment, operation, and integration within the AI ecosystem (see Fig. 1).

Agentic AI Evolution: From Passive Response to Active Adaptation

AI is entering a new agentic stage, evolving from passive instruction execution to active learning, adaptation, and optimization. Through self-iteration and interaction with the environment, such systems can dynamically adjust their strategies to achieve goal-driven, continuous evolution.

As defined by Anthropic, LLM-based agents can autonomously direct their own actions and tool usage, maintaining control over how tasks are accomplished. Although still in a transitional phase, Agentic AI has seen milestones—such as the release of Manus (March 6), the launch of AI Superframe by Quark (March 13), the introduction of Agent TARS by ByteDance, and the booming popularity of Genspark. These developments signal a shift from "tool executors" to highly intelligent entities capable of goal-oriented collaboration and active evolution.

Agentic AI Working Mode: Target-Driven Multi-Agent Coordination

To understand the revolutionary changes brought about by the working mode of Agentic AI, it is useful to compare it with traditional workflows.

Take the search scenario as an example. The transformation is particularly evident here, as it not only reconstructs the technical implementation path but also redefines the underlying logic of human-machine collaboration. A typical Agentic AI search process might proceed as follows:

- A user raises a query, and the agent analyzes and decomposes it to infer true intent.

- If the query is ambiguous, the agent proactively seeks clarification from the user.

- It then selects the appropriate search method, either general-purpose search or specialized data sources, depending on context.

- Finally, it integrates the search results and outputs them in a way aligned with the user's intent.

The LLM continuously learns from search processes and history, enabling the agent to autonomously determine search directions and strategies. Each decision and inference is clearly logged, achieving a degree of interpretability.
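
The four search steps and the decision log can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the keyword set stands in for LLM-based intent analysis, the word-count check stands in for ambiguity detection, and the two search functions are placeholders rather than real search backends.

```python
from typing import List, Tuple

# Hypothetical domain vocabulary standing in for LLM-based intent analysis.
SPECIALIZED_TERMS = {"sinr", "gnodeb", "rrc", "downtilt"}
MIN_QUERY_WORDS = 2  # assumed heuristic: shorter queries count as ambiguous


def general_search(query: str) -> List[str]:
    """Placeholder for a general-purpose search backend."""
    return [f"web result for '{query}'"]


def specialized_search(query: str) -> List[str]:
    """Placeholder for a specialized data source."""
    return [f"knowledge-base entry for '{query}'"]


def handle_query(query: str) -> Tuple[str, List[str]]:
    """Return (response, decision_log) for one user query."""
    log: List[str] = []
    words = query.lower().split()

    # Steps 1 and 2: analyze the query; ask for clarification if it is ambiguous.
    if len(words) < MIN_QUERY_WORDS:
        log.append("intent unclear -> ask user for clarification")
        return "Could you give more detail about what you are looking for?", log

    # Step 3: select the search method based on context.
    if SPECIALIZED_TERMS & set(words):
        log.append("domain terms found -> specialized source")
        results = specialized_search(query)
    else:
        log.append("no domain terms -> general-purpose search")
        results = general_search(query)

    # Step 4: integrate the results into one response.
    log.append(f"integrated {len(results)} result(s)")
    return "; ".join(results), log


answer, decisions = handle_query("low sinr cause")
print(answer)
print(decisions)
```

Returning the log alongside the answer keeps each routing decision inspectable, which is the kind of interpretability the paragraph above refers to.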

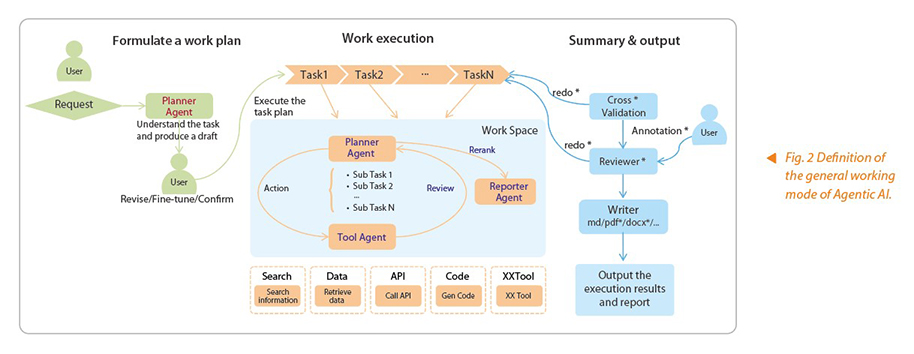

This case demonstrates that, compared with traditional rule-based or workflow-concatenation approaches, Agentic AI shifts from static workflows to dynamic decision-making. As shown in Fig. 2, tasks are completed through the collaboration of multiple agents within a full-stack closed loop comprising planning, decision-making, execution, and evaluation.

Agentic AI has achieved breakthroughs in three key dimensions:

- Goal-driven dynamic planning: Free from preset workflows, it decomposes tasks and adjusts strategies based on real-time environments.

- Closed-loop self-learning and optimization: It improves decision-making paths through continuous feedback and learning, without manual tuning.

- Intelligent resource integration: It proactively invokes heterogeneous systems (e.g. APIs, databases, toolchains) instead of passively awaiting instructions.

Application Scenarios of Agentic AI

We extend the Agentic AI paradigm to autonomous networks, where its impact is equally significant. Using fault handling and network optimization in wireless access networks as two examples, we illustrate a closed-loop system under the Agentic AI paradigm, encompassing perception, decision-making, execution, and evolution, enabled by data sharing, strategy linkage, and task relay.

Troubleshooting Coordination: Automated Closed-Loop Work Orders

In the event of a sudden service interruption at a gNodeB, the system automatically executes the following steps:

- Fault perception: The fault-management agent detects in real time that the RRC connection success rate has dropped from 99% to 72%, accompanied by a 6 dB decrease in the average signal-to-interference-plus-noise ratio (SINR), triggering a Level-3 alarm.

- Root cause diagnosis: The agent queries the knowledge graph, matches the historical case database, and invokes APIs, commands, and tools for data inspection and root cause diagnosis, ruling out hardware faults (equipment health indicators are normal). This step primarily demonstrates intelligent resource integration.

- Fuzzy analysis: Based on the topology spanning the base station, transmission, power and environment monitoring, and the core network, the agent identifies co-channel interference caused by deviations in antenna downtilt of adjacent cells, showcasing goal-driven dynamic planning.

- Automatic repair: The agent provides repair plans, executes automated actions, and prompts users to confirm high-risk operations that may affect services.

- Dynamic parameter adjustment: The agent executes a command to correct the antenna downtilt angle of the faulty base station from 8° to 12°, reducing coverage range.

- Result feedback: The agent checks the outcomes of parameter adjustments and initiates indicator monitoring.

- Indicator verification: The connection success rate rebounds to 98%, and the SINR returns to 18 dB.

- Automatic work order report generation: The report is automatically delivered to O&M personnel through the mobile terminal and marked as “No manual intervention required.”

- Self-learning optimization: The results are stored in the agent's shared memory. Using reinforcement learning, the agent extracts scenarios, rules, and major diagnoses and localization actions from the repair process for future cases, enabling closed-loop self-learning.
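
Two of the steps above, fault perception and confirmation of high-risk repairs, lend themselves to a short Python sketch. The KPI values come from the scenario (with the 18 dB baseline SINR inferred from the recovered value); the detection thresholds, class names, and the `confirm` callback are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class KpiSample:
    rrc_success_rate: float  # percent
    sinr_db: float           # average SINR in dB


# Hypothetical alarm thresholds; real values would be operator-specific.
RRC_DROP_THRESHOLD = 10.0     # percentage points
SINR_DROP_THRESHOLD_DB = 3.0  # dB


def detect_fault(baseline: KpiSample, current: KpiSample) -> bool:
    """Flag a fault when both KPIs degrade past their thresholds."""
    rrc_drop = baseline.rrc_success_rate - current.rrc_success_rate
    sinr_drop = baseline.sinr_db - current.sinr_db
    return rrc_drop >= RRC_DROP_THRESHOLD and sinr_drop >= SINR_DROP_THRESHOLD_DB


@dataclass
class RepairAction:
    description: str
    high_risk: bool  # True if the action may affect live services


def execute_plan(
    plan: List[RepairAction], confirm: Callable[[RepairAction], bool]
) -> List[str]:
    """Run low-risk actions directly; run high-risk ones only if confirmed."""
    outcome: List[str] = []
    for action in plan:
        if action.high_risk and not confirm(action):
            outcome.append(f"skipped (not confirmed): {action.description}")
            continue
        outcome.append(f"executed: {action.description}")
    return outcome


# Values from the scenario: 99% -> 72% success rate and a 6 dB SINR drop.
baseline = KpiSample(rrc_success_rate=99.0, sinr_db=18.0)
current = KpiSample(rrc_success_rate=72.0, sinr_db=12.0)

plan = [
    RepairAction("start KPI monitoring", high_risk=False),
    RepairAction("set antenna downtilt from 8 to 12 degrees", high_risk=True),
]

if detect_fault(baseline, current):
    for line in execute_plan(plan, confirm=lambda action: True):
        print(line)
```

Passing `confirm` as a callback keeps the human-in-the-loop decision outside the agent logic, so the same plan executor can be driven by an operator prompt or by a policy that auto-approves certain actions.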

Optimization Coordination: Predictive Network Optimization

During a large-scale event, the network is affected by a surge in traffic. The system responds as follows:

- Joint prediction (24 hours before the event): The optimization agent predicts a 10-fold traffic peak around the venue, while the fault management agent pre-checks and confirms that the load margin of nearby base stations is insufficient.

- Dynamic pre-configuration (two hours before the event): The optimization agent activates dormant cells to expand network density, while adjusting the QoS policy to prioritize video bandwidth. The fault-management agent simultaneously initiates a system health check and performs stress testing on the capacity-expanded base stations to rule out hardware risks. At this stage, the agents mainly demonstrate intelligent resource integration.

- Network optimization (during the event): When the instantaneous number of users exceeds the forecast by 15%, the optimization and fault-management agents respond collaboratively. The optimization agent initiates spectrum offloading, while the fault-management agent monitors CPU temperatures and dynamically restricts non-urgent services to ensure system stability. At this stage, agents mainly demonstrate goal-driven dynamic planning capabilities.

- Post-event notification: The agent automatically generates a network quality report and pushes it to users via SMS or app, stating "The average user rate during the peak period was 52 Mbps, with a compliance rate of 97%".
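
The in-event coordination rule can be written down as a small decision function. This is a sketch under stated assumptions: the 15% surge margin comes from the scenario, while the CPU temperature limit, the function name, and the action strings are hypothetical.

```python
from typing import List

SURGE_MARGIN = 0.15      # from the scenario: users exceed the forecast by 15%
CPU_TEMP_LIMIT_C = 85.0  # hypothetical limit; real limits are hardware-specific


def plan_event_response(
    forecast_users: int, actual_users: int, cpu_temp_c: float
) -> List[str]:
    """Return the actions each agent takes for the current load and temperature."""
    actions: List[str] = []
    if actual_users > forecast_users * (1 + SURGE_MARGIN):
        actions.append("optimization agent: start spectrum offloading")
        if cpu_temp_c >= CPU_TEMP_LIMIT_C:
            actions.append("fault-management agent: restrict non-urgent services")
        else:
            actions.append("fault-management agent: keep monitoring CPU temperature")
    return actions


for action in plan_event_response(
    forecast_users=10_000, actual_users=12_000, cpu_temp_c=88.0
):
    print(action)
```

In an agentic system this rule would not be hard-coded; the point of the sketch is only to make the trigger condition and the division of work between the two agents explicit.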

Design and Technologies of Agentic AI

Agentic AI Design Paradigms

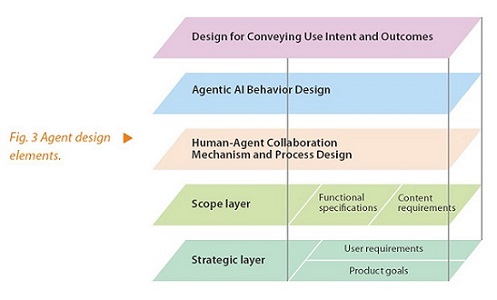

The design of Agentic AI is evolving towards user-adaptive experiences, focusing on three key levels, as shown in Fig. 3.

- Conveying user intent and outcomes: Dynamically map user intent and system responses through interface elements to provide a visual closed loop of operation paths and feedback.

- Agentic AI behavior design: Incorporate anthropomorphic feedback and expectation management to demonstrate the interpretability, controllability, and human-like behavior patterns of AI agents.

- Human-AI collaboration mechanisms and processes: Focus on bidirectional adaptability between humans and AI by building an evolvable collaboration framework with dynamic role allocation, context awareness, transparent decision-making paths, and progressive trust building. This enables AI agents to proactively predict needs, explain behaviors, and accept interventions, ultimately supporting seamless task collaboration.

Core Technologies of LLM-Based Agents

The more an agentic system relies on LLMs for behavioral patterns, the higher its autonomy. LLM-based Agentic AI is centered on four core modules: planning, memory, tools, and perception.

Key technologies of the planning module include:

- Hierarchical task reasoning: Perform global planning based on LLMs, integrating mind maps with reinforcement learning to achieve multi-level goal decomposition and dynamic path optimization.

- Causal reasoning enhancement: Integrate structural causal relationships with empirical data to predict the long-term impact of actions and avoid short-sighted decisions.

- Multi-agent collaboration: Leverage techniques such as real-time multi-agent collaboration, shared memory, and multi-agent confrontation to support task allocation and conflict resolution.

Key technologies of the memory module include:

- Long-term memory compression: Store key historical information and realize efficient retrieval and association via the self-attention mechanism.

- Short-term memory management: Cache dialogue states and environmental contexts to maintain coherence in multi-turn interactions.

- Knowledge distillation and update: Dynamically expand external knowledge bases through continual learning or retrieval-augmented generation (RAG) to reduce model hallucinations.

- Dynamic enhancement of memory: Construct a dynamic memory bank to store interaction trajectories and apply the attention mechanism to extract key experiences.
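
The split between short-term and long-term memory can be sketched as follows. This is a toy model: the `AgentMemory` class and its method names are hypothetical, and word overlap is used as a deliberately simple substitute for the attention-based retrieval mentioned above.

```python
from collections import deque
from typing import Deque, List


class AgentMemory:
    """Toy memory: bounded short-term buffer plus a searchable long-term store."""

    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term memory keeps only the most recent dialogue turns.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term memory keeps key experiences for later retrieval.
        self.long_term: List[str] = []

    def observe(self, turn: str) -> None:
        """Cache a dialogue turn; the oldest turn is dropped when the buffer is full."""
        self.short_term.append(turn)

    def remember(self, experience: str) -> None:
        """Store a key experience in long-term memory."""
        self.long_term.append(experience)

    def recall(self, query: str, top_k: int = 1) -> List[str]:
        """Return the stored experiences sharing the most words with the query."""
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(entry.lower().split())), entry)
            for entry in self.long_term
        ]
        relevant = [pair for pair in scored if pair[0] > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [entry for _, entry in relevant[:top_k]]


memory = AgentMemory(short_term_size=2)
for turn in ["user: hello", "agent: hi", "user: SINR dropped"]:
    memory.observe(turn)
memory.remember("co-channel interference fixed by downtilt change")
memory.remember("traffic surge handled by activating dormant cells")

print(list(memory.short_term))
print(memory.recall("interference after downtilt drift"))
```

A real agent would replace the word-overlap score with embedding similarity or a learned retriever, but the interface (observe, remember, recall) can stay the same.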

Key technologies of the tool module include:

- Automatic tool discovery and usage: Automatically construct tool calling methods based on semantic embedding matching of tool description texts. As the real world is open and dynamic with new tools, APIs, and data sources continuously emerging, an intelligent system must generalize like humans by understanding functional descriptions of new tools and incorporating them into its own capability set.

- Secure sandbox verification: Pre-execute high-risk operations (e.g., network requests) in a restricted environment (e.g., a Docker container), and return results to the main process only after verification.
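
Tool selection by matching a request against tool descriptions can be sketched as below. The bag-of-words vectors are a toy stand-in for the semantic embeddings the text refers to, and the tool names and descriptions are hypothetical examples rather than a real API.

```python
import math
from collections import Counter
from typing import Dict


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical tool registry: name -> functional description.
TOOL_DESCRIPTIONS: Dict[str, str] = {
    "get_cell_kpis": "read kpi counters for a cell",
    "set_antenna_downtilt": "change the antenna downtilt angle of a cell",
    "open_work_order": "create a work order for maintenance staff",
}


def select_tool(request: str, tools: Dict[str, str]) -> str:
    """Pick the tool whose description is most similar to the request."""
    request_vec = embed(request)
    return max(tools, key=lambda name: cosine(request_vec, embed(tools[name])))


print(select_tool("adjust antenna downtilt angle", TOOL_DESCRIPTIONS))
```

Because selection depends only on the description text, registering a new tool requires nothing more than adding an entry to the registry, which is the property the automatic discovery bullet describes.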

Key technologies of the perception module include:

- Multimodal information fusion: Utilize cross-modal representation alignment to uniformly process text, image, and speech inputs to construct environmental state representations.

- Dynamic environment modeling: Predict environmental changes through a world model to help agents anticipate the impact of actions.

- Active perception and attention control: Optimize perceptual focus with reinforcement learning, prioritizing high-value information (e.g., user preferences and habits in conversations).

The Evolutionary Outlook for Agentic AI

AI agents and LLMs are advancing in a mutually reinforcing manner and evolving at a fast pace. In certain domains, they have already approached or even surpassed human experts. We must innovate boldly, while allowing time for the technology to be validated in practice.

Technically, AI is progressing from perceptual intelligence to autonomous decision-making, and multimodal integration will enable agents to interact with the physical world. In application, deployment will begin in finance and medical care before expanding to other vertical fields. Socially, human-AI collaboration will reconstruct workflows and drive new ethics and value frameworks. The design approach is shifting from a "Reason + Tool" model to a "Learning + Reason" model.

Ultimately, Agentic AI will bring a comprehensive upgrade to human cognition, collaboration systems, and values—ushering in a new chapter in human development.