AI Opening a New Horizon of Energy Saving for Core Networks

With increasing global focus on environmental protection, the telecom industry faces growing pressure to transform. Industry organizations agree that green, low-carbon development is key to future networks. The core network, as the network’s brain, plays a vital role in energy saving and emission reduction. Because it comprises a large number of diverse network elements (NEs), the core network consumes substantial resources and is significantly affected by service tidal effects, the recurring swings between busy and idle periods. This presents both opportunities and challenges for energy conservation. Introducing AI into the core network for energy saving is a key enabler of green transformation.

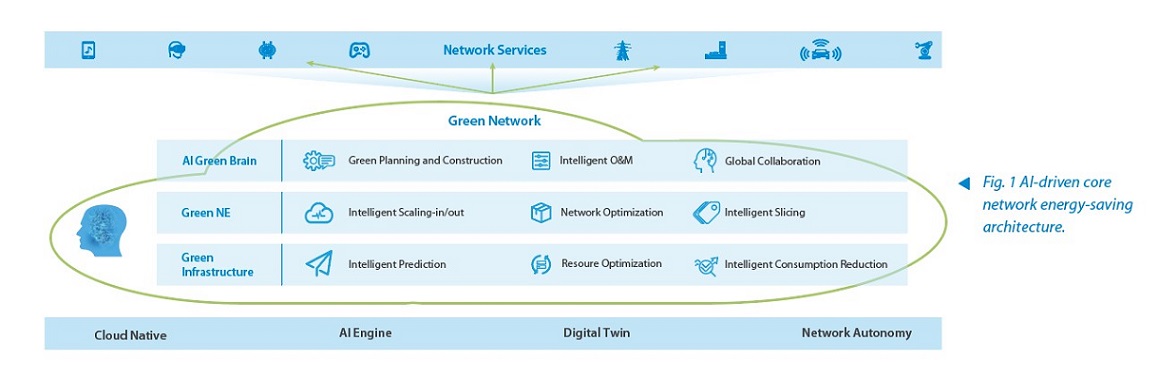

The AI-driven energy-saving architecture of the core network (Fig. 1) centers around the AI green brain. The AI green brain collects data from infrastructure, cloud-based networks, and O&M systems, analyzes it, and dynamically generates energy-saving policies to support global, collaborative energy savings. Through continuous feedback, it evaluates and adjusts the energy-saving effects, ensuring that network energy savings are achieved while meeting service level agreement (SLA) requirements.
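
The feedback loop described above can be illustrated with a minimal sketch. It assumes a single KPI (for example, a session success rate) compared against one SLA threshold, and a hypothetical integer "aggressiveness level" for energy saving; neither the function names nor the level scale come from the architecture itself.

```python
def next_level(level, sla_met, *, max_level=3):
    """Return the next energy-saving aggressiveness level.

    Hypothetical rule: back off one level whenever the SLA is breached,
    otherwise step up one level until max_level is reached.
    """
    if not sla_met:
        return max(0, level - 1)
    return min(max_level, level + 1)


def run_feedback_loop(kpi_samples, sla_threshold, start_level=0):
    """Apply next_level to a sequence of observed KPI values.

    kpi_samples: observed KPI values, one per evaluation cycle.
    sla_threshold: minimum KPI value that still satisfies the SLA.
    Returns the level chosen after each cycle.
    """
    level = start_level
    trace = []
    for kpi in kpi_samples:
        level = next_level(level, kpi >= sla_threshold)
        trace.append(level)
    return trace
```

A real green brain would evaluate many KPIs and policies at once; the point here is only that each cycle's measured effect decides whether saving is pushed further or rolled back.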

Infrastructure: AI-Driven Optimization and Energy Saving for Resource Pools

For a core network based on NFV architecture, the infrastructure has evolved from dedicated equipment and dedicated platforms to various servers and cloud platforms. Control-plane NEs are centrally deployed on universal servers, while the user plane is deployed at various edge nodes as required. At the infrastructure level, energy-saving efforts focus on managing server and cloud platform energy consumption. Current server hardware adopts energy-saving technologies such as efficient heat dissipation, efficient power supplies, and heterogeneous acceleration. In the future, technologies such as integrated storage and computing will be introduced to further improve the computing energy efficiency ratio.

At the software level, AI technology can improve energy efficiency in multiple dimensions.

- Intelligent prediction: By collecting performance indicator data and applying AI models, the intelligent prediction module predicts service trends and identifies busy/idle periods from a global perspective, guiding capacity planning and resource pool scaling.

- Intelligent optimization: The intelligent optimization module leverages intelligent analysis, capacity prediction, and user trend prediction to proactively adjust resources. Dynamic resource scheduling consolidates fragmented resources, reducing fragmentation. In addition, based on service tidal pattern predictions, different NEs are deployed in a hybrid manner to enable unified scheduling of multiple services within the resource pool, thus reducing peak loads and improving overall resource utilization.

- Intelligent consumption reduction: The intelligent consumption reduction module dynamically manages energy consumption based on AI-driven optimization policies. For example, it automatically shuts down unnecessary hardware devices and idle cores, and reduces CPU clock speeds for platform-level energy conservation.
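
As a rough illustration of how prediction can feed consumption reduction, the sketch below forecasts hourly load with a naive per-hour average over past days, then derives how many servers in a pool must stay active and how many could be powered down. The averaging forecast, the 20% headroom, and all function names are assumptions made for this example; a production system would use a trained AI model instead.

```python
import math
from statistics import mean


def predict_hourly_load(history):
    """Naive seasonal forecast: mean load per hour of day across past days.

    history: list of days, each a list of 24 hourly load values.
    """
    return [mean(day[hour] for day in history) for hour in range(24)]


def servers_needed(load, per_server_capacity, headroom=0.2):
    """Servers required to carry `load` while keeping `headroom` spare."""
    usable = per_server_capacity * (1 - headroom)
    return max(1, math.ceil(load / usable))


def plan_pool(history, total_servers, per_server_capacity):
    """Return (hour, active_servers, idle_servers) for each hour of the day."""
    plan = []
    for hour, load in enumerate(predict_hourly_load(history)):
        active = min(total_servers, servers_needed(load, per_server_capacity))
        plan.append((hour, active, total_servers - active))
    return plan
```

Hours with a high idle-server count correspond to the idle periods in which hardware shutdown or frequency reduction is a candidate action.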

Green Network: Intrinsic Intelligent Algorithms Drive Resource Optimization and Energy Saving

With intrinsic intelligent algorithms, core network NEs can evaluate device status based on service load, user online rate, data throughput, and the status of surrounding NEs. This enables the implementation of policies such as automatic scaling, dynamic service scheduling, and automatic CPU frequency adjustment to optimize resource utilization.

- Intelligent scaling: During network operation, the system evaluates and predicts the resources required by the current bearer services of NEs based on historical traffic and capacity expansion requirements, and adjusts capacity accordingly. When traffic increases, computing resources are automatically added; when it decreases, excess resources are reclaimed. In addition, the resources occupied by service components in the core network are dynamically adjusted. When the service is busy, the number of CPU cores occupied by the components is increased; when idle, the CPU core frequency is decreased first, with further adjustments made until the cores are shut down to save energy.

- Intelligent network optimization: AI optimizes the core network’s topology and routing policies to reduce redundant data transmission and unnecessary signaling interactions. For example, an intelligent routing algorithm is used to select the optimal data transmission path, reducing hops and delays, improving transmission efficiency, and lowering energy consumption.
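
The staged behavior described under intelligent scaling (add cores when busy; when idle, lower frequency first and release cores only afterwards) might be sketched as follows. The utilization thresholds, frequency values, and the choice to restore full frequency when busy are illustrative assumptions, not values taken from any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentState:
    cores: int      # CPU cores currently assigned to the service component
    freq_mhz: int   # current core frequency


def adjust(state, utilization, *, busy=0.75, idle=0.30,
           min_cores=1, max_cores=16,
           min_freq=1200, max_freq=2400, freq_step=400):
    """Return the next state for one evaluation cycle (hypothetical rule set).

    Busy: restore full frequency and add one core, up to max_cores.
    Idle: lower frequency one step at a time; once at min_freq,
          release one core per cycle down to min_cores.
    Otherwise: leave the state unchanged.
    """
    if utilization >= busy:
        return ComponentState(min(max_cores, state.cores + 1), max_freq)
    if utilization <= idle:
        if state.freq_mhz > min_freq:
            return ComponentState(state.cores,
                                  max(min_freq, state.freq_mhz - freq_step))
        return ComponentState(max(min_cores, state.cores - 1), state.freq_mhz)
    return state
```

Calling `adjust` repeatedly with a low utilization walks a component from full frequency down to the minimum frequency and only then begins releasing cores, which mirrors the frequency-first ordering in the text.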

Green Brain: SLA-Based Intelligent Energy Consumption Evaluation and Optimization

Energy savings must be aligned with service SLA requirements. By monitoring real-time operational status and running simulations on historical data, the AI green brain builds a resource usage model that accurately reflects actual conditions and establishes a resource consumption simulation system. Together, these enable the prediction of service and energy consumption trends and the intelligent evaluation of resource and energy usage. A resource trend model is then used to match resource allocation to current service demands, so that network services are deployed optimally, SLA compliance is ensured, and resource consumption is balanced.

- NE-level tuning: NE-level microservice components adopt strategies such as self-sleep, intelligent NE scaling, and dynamic pool migration to reduce system energy consumption. Intelligent switching between energy-saving modes during busy and idle states is enabled through NE traffic-based energy consumption awareness.

- DC-level tuning: At the data center level, energy-saving policies include equipment frequency reduction, sleep mode, power-off, and power control based on resource status. Dynamic and transparent service migration across the entire DC network enables intelligent resource defragmentation, preventing the continuous emergence or spread of resource holes.

- Slice-level tuning: Energy consumption of core network sub-slices is optimized through resource-saving policies of the core network, and can be coordinated with radio and transmission sub-slice optimization to achieve end-to-end energy efficiency at the slice level.
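
One way to picture SLA-based evaluation is as a constrained choice: among candidate policies, keep only those whose predicted KPIs satisfy the SLA, then pick the one with the largest predicted saving. The sketch below assumes the predictions already exist (for instance, from the simulation system); the `Policy` fields and the two KPIs chosen are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    predicted_saving_pct: float    # predicted energy saving, in percent
    predicted_latency_ms: float    # predicted service latency under the policy
    predicted_success_rate: float  # predicted service success rate (0..1)


def select_policy(candidates, max_latency_ms, min_success_rate):
    """Return the SLA-compliant policy with the highest predicted saving.

    Returns None when no candidate meets the SLA, in which case the
    caller would keep the current configuration unchanged.
    """
    compliant = [
        p for p in candidates
        if p.predicted_latency_ms <= max_latency_ms
        and p.predicted_success_rate >= min_success_rate
    ]
    if not compliant:
        return None
    return max(compliant, key=lambda p: p.predicted_saving_pct)
```

For example, with made-up predictions for frequency reduction, sleep mode, and power-off, a policy that saves the most energy but is predicted to breach the latency bound is excluded before the comparison is made.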

ZTE has applied green, energy-saving design to core network products across planning, construction, and maintenance and has steadily improved energy efficiency. Recently, ZTE cooperated with a Chinese telecom operator to successfully complete the industry’s first commercial pilot of intelligent 5G UPF power saving, achieving a 7%–15% power reduction without affecting service KPIs or user experience. Looking ahead, ZTE will leverage new AI model capabilities to drive further innovation in core networks, explore new energy-saving solutions, and support the dual-carbon goal.