Cloud Native: The Application Architecture for Next-Generation Virtual Core Networks

The Evolution of Core Network Application Architecture

Today, network operators deliver voice, SMS, and data services to users through proprietary hardware and software in their core networks. In these traditional core networks, hardware resources cannot be shared, which makes deployment inflexible. Closed software architectures limit scalability. Stovepipe services deployed independently of one another make operations and maintenance (O&M) complex.

With emerging IoT services booming and 5G commercialization accelerating, core networks face new requirements such as massive connectivity, flexible deployment, adaptability to diverse scenarios, and innovative service capabilities. The industry regards software-defined networking (SDN) and network functions virtualization (NFV) as the key technologies for future core networks.

The core network architecture needs to support flexible network element (NE) deployment, open service capabilities, and 5G network slicing. Because it adopts microservices, DevOps, and other IT design practices, cloud native is widely regarded as the main architecture for designing and developing future core network applications.

Key Technologies of Cloud Native

A core network application architecture must meet the stability requirements specific to telecom applications while adopting ideas from the IT industry to optimize and improve itself. Cloud native core network applications have the following four features:

Microservice

An application is built from stateless microservices, each designed around the principle of high cohesion and low coupling. Microservices communicate with each other through APIs or a unified message bus.
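
To illustrate the message-bus style of communication, here is a minimal sketch, assuming an in-process publish/subscribe bus as a stand-in for a real unified message bus. The class `MessageBus`, the topic `ue.attached`, and the two microservice roles in the comments are hypothetical names chosen for illustration. The point it shows is that the publisher does not need to know which microservices consume its messages, which is what keeps coupling low.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class MessageBus:
    """Hypothetical in-process stand-in for a unified message bus."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # A microservice registers interest in a topic.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        # Deliver the message to every subscriber of the topic and
        # return how many subscribers received it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
received = []
# Hypothetical session-management microservice listening for attach events.
bus.subscribe("ue.attached", lambda msg: received.append(msg["ue_id"]))
# Hypothetical access-management microservice publishing without knowing its consumers.
delivered = bus.publish("ue.attached", {"ue_id": "imsi-001"})
```

A production bus would add persistence, delivery guarantees, and network transport. The sketch only captures the decoupling between producer and consumer.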

All user access and session information is stored in a shared data layer. Wherever it is located, each microservice instance can obtain the latest user status from this layer.

With this design, each microservice can be run, scaled out and in, and upgraded independently. Distributing microservice instances across locations also improves application reliability.
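
The stateless pattern can be sketched in a few lines, assuming a plain dictionary stands in for the shared data layer (a real deployment would use a distributed, replicated store). `SharedDataLayer`, `SessionInstance`, and the event names are hypothetical. Because each instance reads and writes session state only through the shared layer, any instance can continue a session started by another, so instances can be added, removed, or upgraded without losing user state.

```python
from typing import Dict, Optional


class SharedDataLayer:
    """Hypothetical shared data layer; a dict stands in for a distributed store."""

    def __init__(self) -> None:
        self._sessions: Dict[str, dict] = {}

    def get(self, ue_id: str) -> Optional[dict]:
        session = self._sessions.get(ue_id)
        # Return a copy so callers cannot mutate stored state by accident.
        return dict(session) if session is not None else None

    def put(self, ue_id: str, session: dict) -> None:
        self._sessions[ue_id] = dict(session)


class SessionInstance:
    """A stateless microservice instance: it keeps no per-user state of its own."""

    def __init__(self, name: str, store: SharedDataLayer) -> None:
        self.name = name
        self.store = store

    def handle(self, ue_id: str, event: str) -> dict:
        # Load the latest session state, apply the event, and write it back.
        session = self.store.get(ue_id) or {"ue_id": ue_id, "events": []}
        session["events"] = session["events"] + [event]
        session["last_handled_by"] = self.name
        self.store.put(ue_id, session)
        return session


store = SharedDataLayer()
instance_a = SessionInstance("instance-a", store)
instance_b = SessionInstance("instance-b", store)
instance_a.handle("imsi-001", "attach")
# A different instance picks up the same user's session seamlessly.
result = instance_b.handle("imsi-001", "pdu_session_create")
```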

Automation

Cloud native applications should be highly automated across multiple phases: blueprint design, resource scheduling and orchestration, lifecycle management, status monitoring, and control policy updates. A closed-loop feedback mechanism links these phases together, enabling one-click deployment, full autonomy, and highly efficient management. The automated platform enables users to design and deploy NEs and networks for featured services quickly and with agility.
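
One pass of such a closed loop (monitor, analyze against a policy, act) can be sketched as a simple autoscaling reconciler. This is an illustrative sketch under stated assumptions, not the platform's actual control logic. `ScalingPolicy`, `desired_instances`, `reconcile`, and the threshold values are hypothetical, and the `print` call marks where a real platform would invoke its orchestrator.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical control policy; the numbers used below are illustrative only.
    target_load_per_instance: float  # load one instance is expected to carry
    min_instances: int
    max_instances: int


def desired_instances(observed_load: float, policy: ScalingPolicy) -> int:
    """Analyze step: map the monitored load to an instance count within policy bounds."""
    needed = math.ceil(observed_load / policy.target_load_per_instance)
    return max(policy.min_instances, min(policy.max_instances, needed))


def reconcile(current: int, observed_load: float, policy: ScalingPolicy) -> int:
    """One monitor -> analyze -> act pass; returns the new instance count."""
    target = desired_instances(observed_load, policy)
    if target != current:
        # Act step: a real platform would call its orchestrator here.
        print(f"scaling {current} -> {target}")
    return target


policy = ScalingPolicy(target_load_per_instance=1000.0, min_instances=2, max_instances=10)
instances = 2
for load in [1500.0, 4200.0, 12000.0, 800.0]:  # simulated monitoring samples
    instances = reconcile(instances, load, policy)
```

Updating the policy object (the "control policy update" phase) changes the loop's behavior on the next pass without redeploying anything, which is the feedback property the text describes.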

Lightweight Virtualization

Compared with traditional virtual machines (VMs), container virtualization offers high scalability, dense deployment, and high performance. It has developed rapidly and is widely used in the IT industry. In a cloud native application architecture, application components must be deployed in containers. This improves resource utilization and enables quick service delivery and agile application maintenance.

In actual deployments, cloud native applications are decoupled from the underlying virtualization technology, so they can run in a mixed container/VM environment.

DevOps

Programmable access to telecom network capabilities is essential to service innovation and ecosystem enrichment.

The application capabilities of a core network can be opened to third parties through microservice APIs/SDKs for further development and service innovation. A virtualized operations platform can also provide developer-friendly tools and environments for continuous development and delivery. In this way, developers can develop, release, and upgrade services in DevOps mode.
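
A minimal sketch of capability exposure follows. It assumes a gateway that publishes selected microservice operations and checks a per-developer grant before each call. `CapabilityGateway`, the capability name `location.query`, the key `dev-key-123`, and the stubbed `query_cell_location` operation are all hypothetical. They are not part of any real operator API or SDK.

```python
from typing import Callable, Dict, Set


class CapabilityGateway:
    """Hypothetical exposure layer that publishes selected microservice
    operations to authorized third-party developers."""

    def __init__(self) -> None:
        self._capabilities: Dict[str, Callable[..., dict]] = {}
        self._grants: Dict[str, Set[str]] = {}

    def expose(self, name: str, operation: Callable[..., dict]) -> None:
        # The operator decides which microservice operations are opened.
        self._capabilities[name] = operation

    def grant(self, api_key: str, name: str) -> None:
        # Authorize a developer key to use one exposed capability.
        self._grants.setdefault(api_key, set()).add(name)

    def call(self, api_key: str, name: str, **kwargs) -> dict:
        if name not in self._grants.get(api_key, set()):
            raise PermissionError(f"{name} is not granted to this key")
        return self._capabilities[name](**kwargs)


def query_cell_location(ue_id: str) -> dict:
    # Hypothetical location microservice operation; the result is stubbed.
    return {"ue_id": ue_id, "cell_id": "cell-42"}


gateway = CapabilityGateway()
gateway.expose("location.query", query_cell_location)
gateway.grant("dev-key-123", "location.query")
answer = gateway.call("dev-key-123", "location.query", ue_id="imsi-001")
```

Keeping authorization in the gateway rather than in each microservice lets the operator open or revoke capabilities without changing the microservices themselves.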

Cloud Native Applications

Helping Operators Transform Network Functions

● Quick delivery and short time to market (TTM): In a traditional waterfall R&D model, every release goes through requirement confirmation, coding, unit testing, integration testing, and function release. Modules are tested in series, so teams spend a long time waiting and R&D efficiency is low. Agile development optimizes the development stage but does not address O&M. In the microservice mode, service functions can be modified or added one microservice at a time. This narrows the scope of each change, lets different applications share and call the same microservices, and avoids duplicate development for different NEs. In the DevOps mode, streamlined tools support large-scale, concurrent, continuous development and reduce waiting time. When a requirement changes, modifications can be made quickly to achieve continuous delivery. As a result, a cloud native application can shorten the version release cycle from months to weeks or even days, meeting operators' needs quickly through iteration.

● Capability openness for service innovation: In a traditional core network, NEs are implemented as defined by standards organizations such as 3GPP, service types are limited, and the advantages of the operator's communication pipes cannot be fully leveraged. With a cloud native application, a third-party developer can call its microservices through APIs/SDKs when writing a program, so service applications are tightly integrated with operator network capabilities. Opening operator capabilities in this way can also create a mass innovation platform that attracts more third parties to telecom service innovation, enriching the ecosystem and creating new types of telecom services.

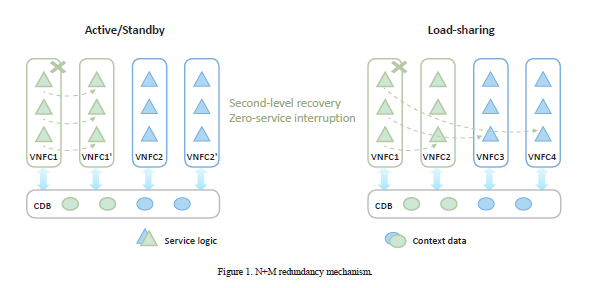

● Stateless design to enhance application reliability: How to achieve carrier-class reliability (99.999%) on commercial off-the-shelf (COTS) hardware has become a hot topic in NFV. ZTE's core network applications achieve carrier-class reliability (99.999%) through stateless, distributed, and N+M redundancy design, without depending on the reliability of the NFV infrastructure (NFVI) layer (Fig. 1). In a cloud native application, multiple microservice instances can be distributed across different data centers (DCs) according to service requirements. This provides geographic disaster tolerance and further enhances application robustness.

● Simplified O&M to improve operational efficiency: Traditionally, a core network is operated, maintained, and upgraded NE by NE, and complex service migration and fallback operations are needed to guard against upgrade failures. A cloud native application can instead be upgraded one microservice at a time, a finer granularity than an NE. Because microservices are highly cohesive and loosely coupled, upgrading one has little impact on the others. When a microservice has multiple instances, some of them can be grayscale-upgraded first to validate the new version. If a problem is found, a quick fallback can be performed to eliminate its impact.
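
To see why N+M redundancy can reach 99.999% even when individual instances run on less reliable COTS hardware, consider a standard k-out-of-n availability model. This is an illustrative calculation, not ZTE's reliability method. It assumes that instance failures are independent, that every instance has the same availability, and that the service stays up while at least N of the N+M instances are up. The 0.99 per-instance availability is an assumed figure. Correlated failures (for example, instances sharing a host or DC) break the independence assumption, which is one reason to spread instances across DCs.

```python
from math import comb


def n_plus_m_availability(n: int, m: int, instance_availability: float) -> float:
    """Probability that at least n of n + m instances are up, assuming
    independent failures and identical per-instance availability."""
    total = n + m
    a = instance_availability
    return sum(
        comb(total, k) * a**k * (1 - a) ** (total - k)
        for k in range(n, total + 1)
    )


def downtime_minutes_per_year(availability: float) -> float:
    # Expected unavailable minutes in a 365-day year.
    return (1 - availability) * 365 * 24 * 60


# Compare 4+0 through 4+3 redundancy with an assumed per-instance availability of 0.99.
for m in range(4):
    avail = n_plus_m_availability(4, m, 0.99)
    print(f"4+{m}: availability={avail:.7f}, "
          f"downtime={downtime_minutes_per_year(avail):.1f} min/year")
```

Under these assumptions, 4+2 falls just short of five nines, while 4+3 exceeds it. This shows how the redundancy level M can be chosen against a reliability target instead of relying on NFVI-level reliability.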

Meeting 5G Service Requirements

● Flexible deployment: In a 5G core network, NEs are deployed flexibly. Different parts of an NE can be placed where needed, such as in core DCs, edge DCs, or access DCs. For ultra-reliable low-latency communications (URLLC), multiple microservice instances responsible for message forwarding can be deployed close to the service network and base stations to meet strict latency requirements. For massive IoT connections, which generate little traffic, the microservice instances responsible for both the control plane and message forwarding can be deployed in a core DC.

● Meeting service requirements in multiple scenarios: Network slicing, an essential feature of the 5G core network, can meet the requirements of different network scenarios. In the top-level design of network slicing, operators can flexibly assemble different microservice types or component libraries to build customized network slices according to actual service requirements. Network slices are logically isolated and do not affect one another. Different slices can be given different SLAs, and each SLA has its own resource allocation algorithm and redundancy level. Together, these make the most cost-effective use of infrastructure resources.
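
The deployment and slicing ideas above can be combined in a small planning sketch. It assumes a slice is described by a list of microservices plus an SLA, and that a simple rule maps the SLA to placement and redundancy. `SliceSLA`, `Placement`, `plan_slice`, the site names, and the thresholds (10 ms, 0.99999) are hypothetical and chosen only for illustration. Real slice orchestration uses far richer templates and policies.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SliceSLA:
    max_latency_ms: float
    availability_target: float


@dataclass
class Placement:
    microservice: str
    site: str        # hypothetical site names: "edge-dc" or "core-dc"
    instances: int


def plan_slice(microservices: List[str], sla: SliceSLA) -> List[Placement]:
    """Hypothetical placement rule: latency-critical forwarding goes to the
    edge, and stricter availability targets get more redundant instances."""
    redundancy = 3 if sla.availability_target >= 0.99999 else 2
    plan = []
    for ms in microservices:
        at_edge = ms == "forwarding" and sla.max_latency_ms <= 10
        plan.append(Placement(ms, "edge-dc" if at_edge else "core-dc", redundancy))
    return plan


# A URLLC-style slice and a massive-IoT-style slice built from the same microservice types.
urllc = plan_slice(["session-mgmt", "forwarding"],
                   SliceSLA(max_latency_ms=5, availability_target=0.99999))
miot = plan_slice(["session-mgmt", "forwarding"],
                  SliceSLA(max_latency_ms=1000, availability_target=0.999))
```

The same microservice types yield different deployments per slice. In the URLLC-style slice, forwarding moves to the edge with extra redundancy. In the IoT-style slice, everything stays in the core DC with fewer instances.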

Conclusion

Cloud native offers greater effectiveness, flexibility, and openness than conventional network architectures. It meets the needs of 5G software architecture and has become the best choice for operators adding or replacing core network equipment.